These days, Data and Dev teams face increasingly complex workloads. But what if there were a smart assistant that could manage every step, queue each task in order, and keep everything running smoothly?

That’s why we invited Patsakorn, a Platform Services Engineer, to share how Apache Airflow 3 has become the go-to companion for Data and Dev professionals, making their work faster, simpler, and a lot less stressful.

What is Apache Airflow?

Imagine you’re a project manager running a large project with dozens of tasks: many depend on one another, some can’t start until others finish, and everything must stay on schedule.

Apache Airflow takes on exactly that role for data workflows. It acts as an automated project manager: you define workflows as Python code, and Airflow runs each task in the right order and on schedule, recording every run so the whole process stays traceable.

What is a DAG?

DAG stands for Directed Acyclic Graph:

• Directed – tasks flow in one direction (like water running downstream).

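• Acyclic – no cycles: following the dependencies from any task never leads back to that same task, whether directly or through other tasks.

• Graph – a network of tasks (nodes) linked by dependencies (edges), which Airflow can draw as a visual map of how tasks connect.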
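These three properties can be checked with a few lines of plain Python. This is a minimal sketch using the standard library's `graphlib` module rather than Airflow itself, and the task names are hypothetical. Each task lists the tasks it waits for; a topological sort then yields a valid run order, while a cycle makes the graph impossible to schedule.

```python
from graphlib import CycleError, TopologicalSorter

# Each key is a task; its value is the set of upstream tasks it must wait for.
pipeline = {
    "extract": set(),
    "clean": {"extract"},
    "enrich": {"extract"},
    "load": {"clean", "enrich"},
}

def execution_order(graph: dict[str, set[str]]) -> list[str]:
    """Return an order in which every task comes after all of its upstream tasks."""
    return list(TopologicalSorter(graph).static_order())

def is_acyclic(graph: dict[str, set[str]]) -> bool:
    """True if the graph has no cycles, i.e. it is a valid DAG."""
    try:
        TopologicalSorter(graph).prepare()  # raises CycleError when it finds a loop
    except CycleError:
        return False
    return True

print(execution_order(pipeline))  # "extract" first, "load" last, "clean"/"enrich" in between

loop = {"a": {"b"}, "b": {"a"}}  # a waits for b and b waits for a: never runnable
print(is_acyclic(pipeline), is_acyclic(loop))  # True False
```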

Airflow doesn’t define formal DAG “types”, but most workflows follow a handful of common patterns. Here are five that help Data and Dev teams build smarter, faster, and more reliable workflows in Airflow 3.

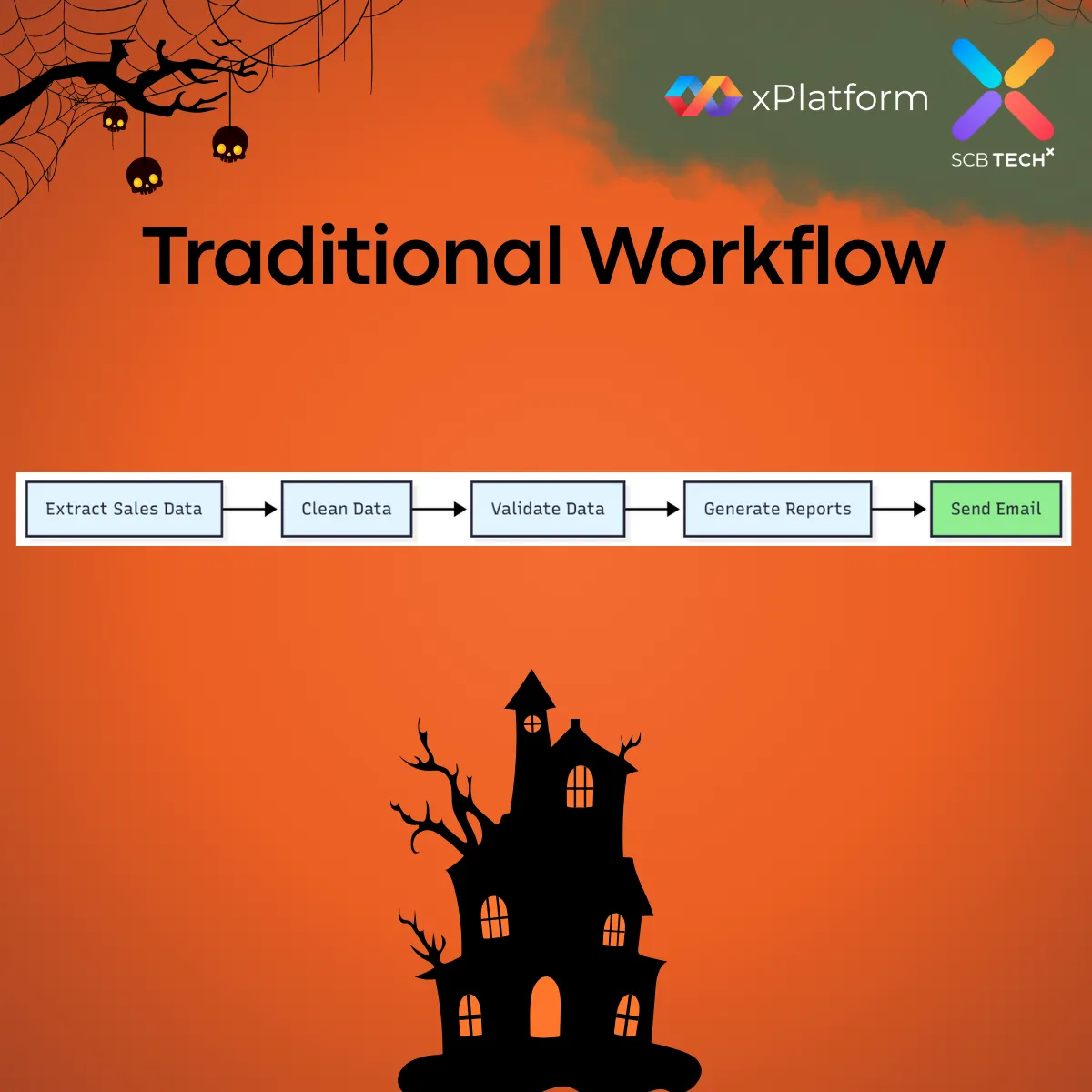

1. Standard DAG (Traditional Workflow)

The most common pattern: a fixed sequence of tasks that run one after another, each starting only when the previous one has finished.

Example: Processing daily sales data → extract from database → clean & validate → generate reports → send email notifications.
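In Airflow, each step would be its own task, with the order declared through dependencies (for example with the `>>` operator, as in `extract >> clean >> report >> notify`). The sketch below is not Airflow code: it is plain Python, with hypothetical function names and sample data, that mimics the same linear flow, where each step runs only after the previous one finishes and receives its output.

```python
from dataclasses import dataclass

@dataclass
class Sale:
    region: str
    amount: float

def extract() -> list[Sale]:
    # Hypothetical stand-in for a database query.
    return [Sale("north", 120.0), Sale("south", -5.0), Sale("north", 80.0)]

def clean_and_validate(rows: list[Sale]) -> list[Sale]:
    # Example validation rule: drop rows with a negative amount.
    return [row for row in rows if row.amount >= 0]

def generate_report(rows: list[Sale]) -> dict[str, float]:
    # Total sales per region.
    totals: dict[str, float] = {}
    for row in rows:
        totals[row.region] = totals.get(row.region, 0.0) + row.amount
    return totals

def send_notification(report: dict[str, float]) -> str:
    # Stand-in for sending an email: build and return the message body.
    lines = [f"{region}: {total:.2f}" for region, total in sorted(report.items())]
    return "Daily sales\n" + "\n".join(lines)

def run_daily_sales() -> str:
    # Strict order: each step starts only after the previous one has returned.
    rows = extract()
    valid_rows = clean_and_validate(rows)
    report = generate_report(valid_rows)
    return send_notification(report)

print(run_daily_sales())
```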

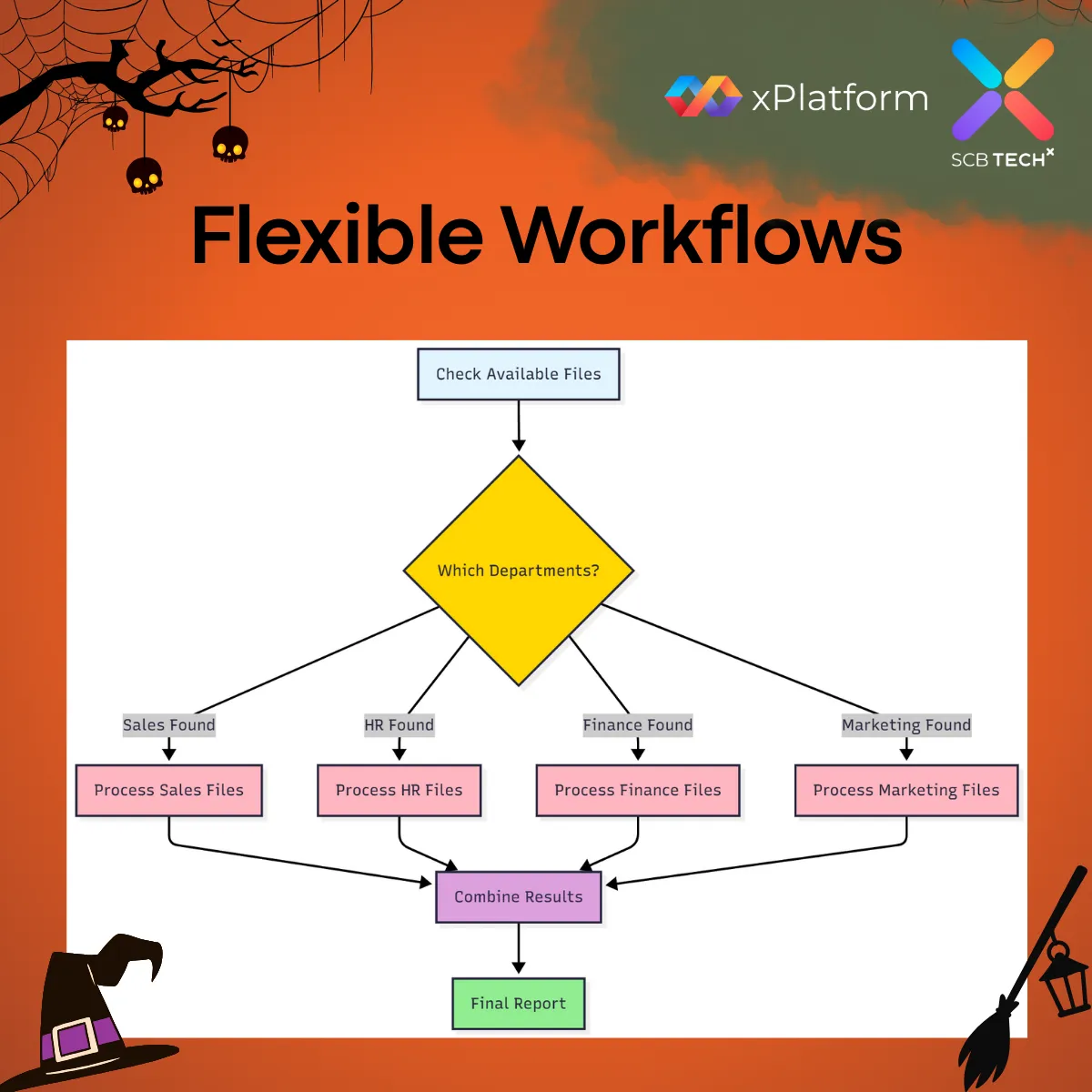

2. Dynamic DAG (Flexible Workflows)

A workflow whose tasks are generated from the data available at run time, like a restaurant menu that changes depending on which ingredients are in stock.

Example: processing files from multiple departments → check which departments uploaded files today → create tasks only for those departments → each department gets its own pipeline.
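Airflow’s built-in mechanism for this is dynamic task mapping, where calling `.expand()` on a task creates one task instance per item returned by an upstream task at run time. The sketch below imitates that idea in plain Python rather than using Airflow; the upload log, dates, and function names are hypothetical.

```python
from datetime import date

# Hypothetical upload log: department -> date of its most recent file upload.
uploads = {
    "finance": date(2024, 5, 2),
    "hr": date(2024, 5, 1),
    "sales": date(2024, 5, 2),
}

def departments_to_process(upload_log: dict[str, date], today: date) -> list[str]:
    """The 'check' step: decide at run time which departments need a pipeline."""
    return sorted(dept for dept, day in upload_log.items() if day == today)

def process_department(dept: str) -> str:
    # One task per department; the real per-department work would go here.
    return f"processed {dept}"

def run(today: date) -> list[str]:
    depts = departments_to_process(uploads, today)
    # Fan out: the number of tasks depends on the data, not on fixed code.
    return [process_department(d) for d in depts]

print(run(date(2024, 5, 2)))  # ['processed finance', 'processed sales']
```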

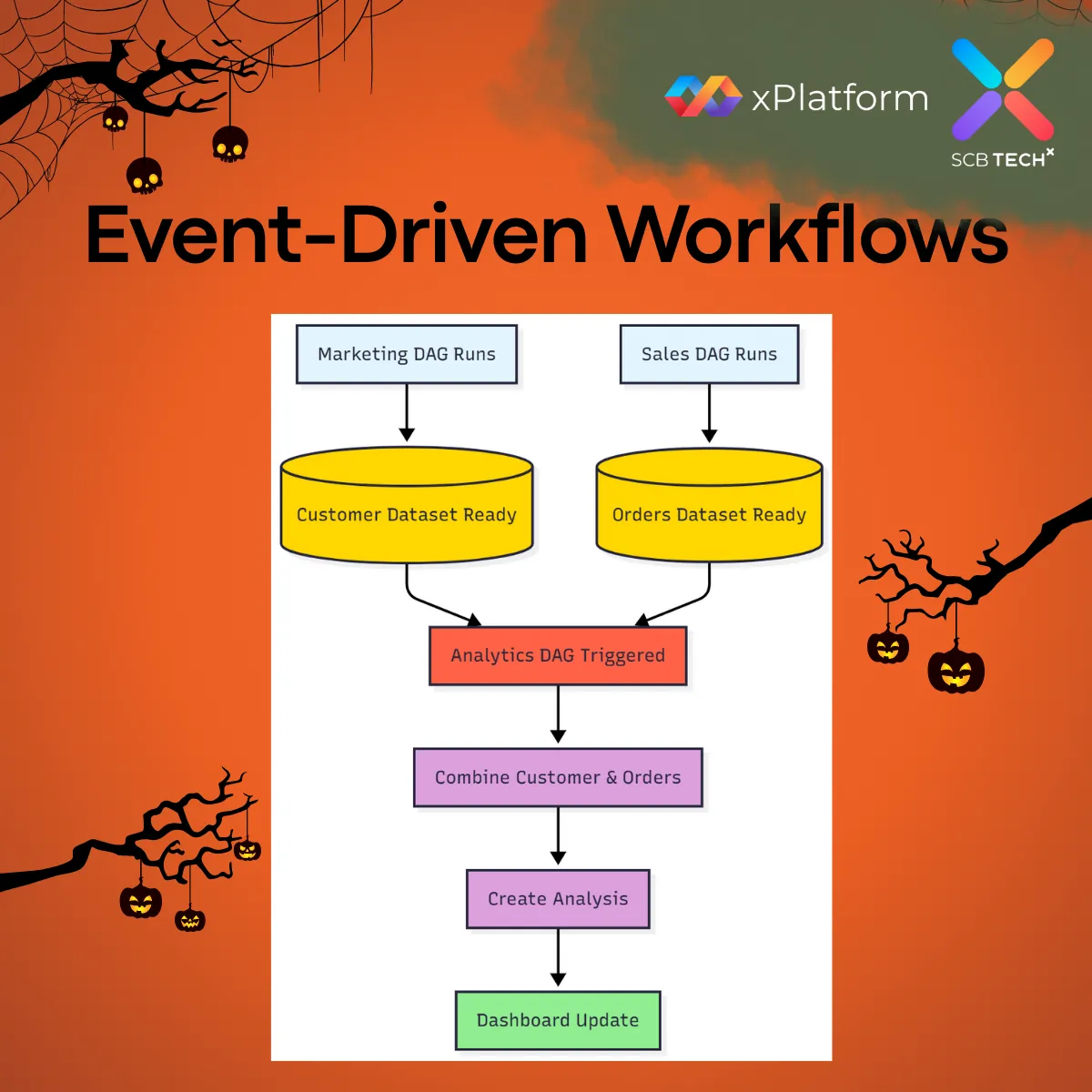

3. Dataset-Triggered DAG (Event-Driven Workflows)

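These DAGs don’t run on a fixed schedule; they start when specific data has been updated. (In Airflow 3, Datasets were renamed Assets, so the official documentation describes this feature in terms of Assets.)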

Think of a chef who only starts cooking once all the ingredients have arrived.

Example: the Marketing DAG produces customer data → the Sales DAG waits for that data and produces sales data → the Analytics DAG runs only when both datasets (customer and sales data) are ready.
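In Airflow 3, a consumer DAG lists the assets it waits for in its `schedule`, and when a list is given, the DAG runs once every listed asset has been updated. The toy class below models that “run when all inputs are fresh” rule in plain Python; it is an illustration rather than Airflow’s implementation, and the class, DAG, and asset names are hypothetical.

```python
class DataReadinessTracker:
    """Toy model of data-triggered scheduling (not Airflow's implementation)."""

    def __init__(self) -> None:
        self.required: dict[str, set[str]] = {}  # DAG -> assets it waits for
        self.seen: dict[str, set[str]] = {}      # DAG -> assets updated since its last run

    def register(self, dag_id: str, assets: list[str]) -> None:
        self.required[dag_id] = set(assets)
        self.seen[dag_id] = set()

    def asset_updated(self, asset: str) -> list[str]:
        """Record an update and return the DAGs that are now ready to run."""
        ready = []
        for dag_id, needed in self.required.items():
            if asset in needed:
                self.seen[dag_id].add(asset)
                if self.seen[dag_id] == needed:
                    ready.append(dag_id)
                    self.seen[dag_id] = set()  # wait for fresh updates before the next run
        return ready

tracker = DataReadinessTracker()
tracker.register("sales", ["customer_data"])
tracker.register("analytics", ["customer_data", "sales_data"])

print(tracker.asset_updated("customer_data"))  # ['sales']
print(tracker.asset_updated("sales_data"))     # ['analytics']
```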

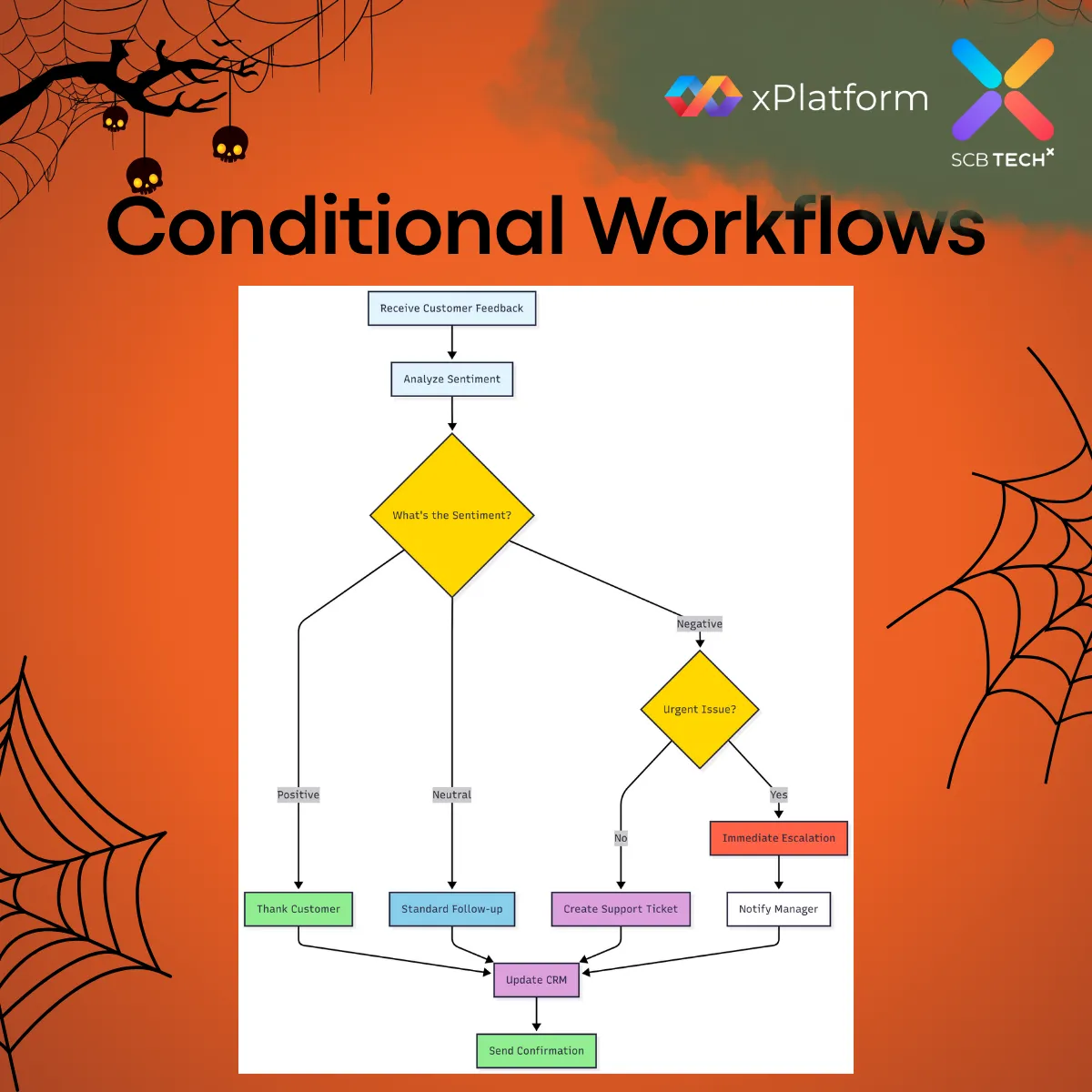

4. Branching DAG (Conditional Workflows)

A decision-based workflow that follows different paths depending on conditions, like a “choose your own adventure” story: at each decision point only the chosen path runs, and the other paths are skipped.

Example: processing customer feedback → check sentiment → route to different teams based on the result → escalate urgent issues automatically.
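In Airflow, a branch task (written with the `@task.branch` decorator) returns the ID of the task to follow, or a list of IDs, and the other downstream tasks are skipped. This sketch shows only the decision function in plain Python; the keyword lists are a hypothetical stand-in for a real sentiment check, and the task IDs are made up.

```python
import re

# Hypothetical keyword lists standing in for a real sentiment model.
NEGATIVE = {"broken", "late", "slow", "refund", "terrible"}
URGENT = {"refund", "outage", "legal"}

def words(text: str) -> set[str]:
    # Lowercase and keep only letters, so "refund!" matches "refund".
    return set(re.findall(r"[a-z]+", text.lower()))

def choose_branch(feedback: str) -> list[str]:
    """Return the IDs of the downstream tasks to run; all others would be skipped."""
    found = words(feedback)
    if found & URGENT:
        return ["escalate_to_manager", "notify_support"]
    if found & NEGATIVE:
        return ["notify_support"]
    return ["share_with_marketing"]

for text in ["I want a refund!", "Delivery was late.", "Great service, thanks"]:
    print(text, "->", choose_branch(text))
```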

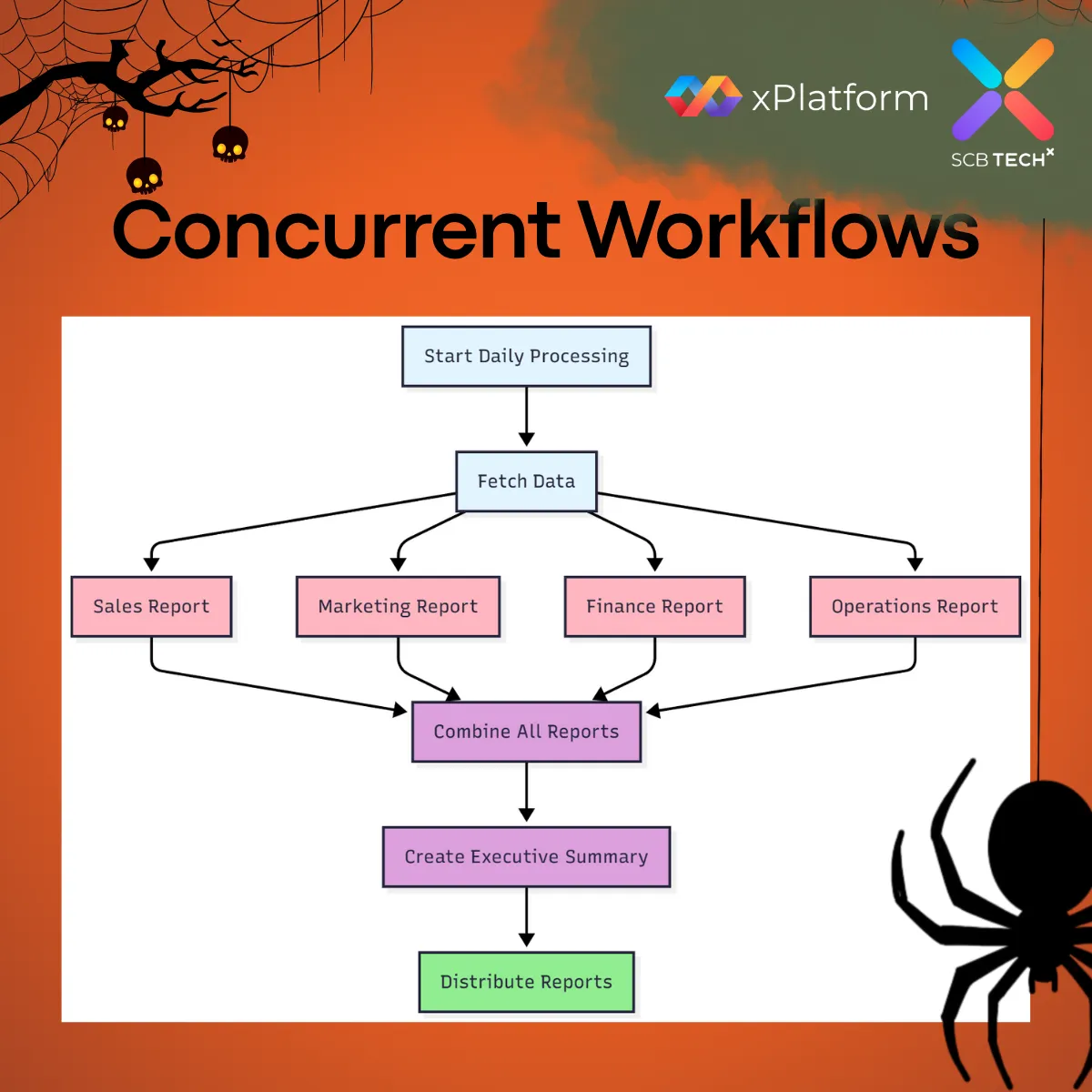

5. Parallel Processing DAG (Concurrent Workflows)

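When tasks don’t depend on each other, they can run at the same time, like several chefs cooking different dishes at once.

Example: generate several reports in parallel → combine the results → deliver them to stakeholders. Given enough workers, the parallel step takes roughly as long as the slowest report rather than the sum of all of them.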
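This fan-out/fan-in shape can be sketched with Python’s standard `concurrent.futures` module. It is an analogy rather than Airflow code: in Airflow, independent tasks run concurrently on the executor’s workers, subject to the configured concurrency limits. The report names and the sleep that stands in for real work are hypothetical.

```python
import time
from concurrent.futures import ThreadPoolExecutor

REPORTS = ["sales", "inventory", "marketing", "finance"]  # hypothetical report names

def generate_report(name: str) -> str:
    time.sleep(0.2)  # stand-in for a slow query or export
    return f"{name} report"

def combine(reports: list[str]) -> str:
    return "; ".join(reports)

def build_reports(names: list[str], workers: int = 4) -> str:
    # Fan out: independent reports run at the same time on separate threads.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        results = list(pool.map(generate_report, names))  # results keep input order
    # Fan in: combine only after every report has finished.
    return combine(results)

start = time.perf_counter()
print(build_reports(REPORTS))
# Elapsed time is close to one report's duration, not the sum of all four.
print(f"took {time.perf_counter() - start:.1f}s")
```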

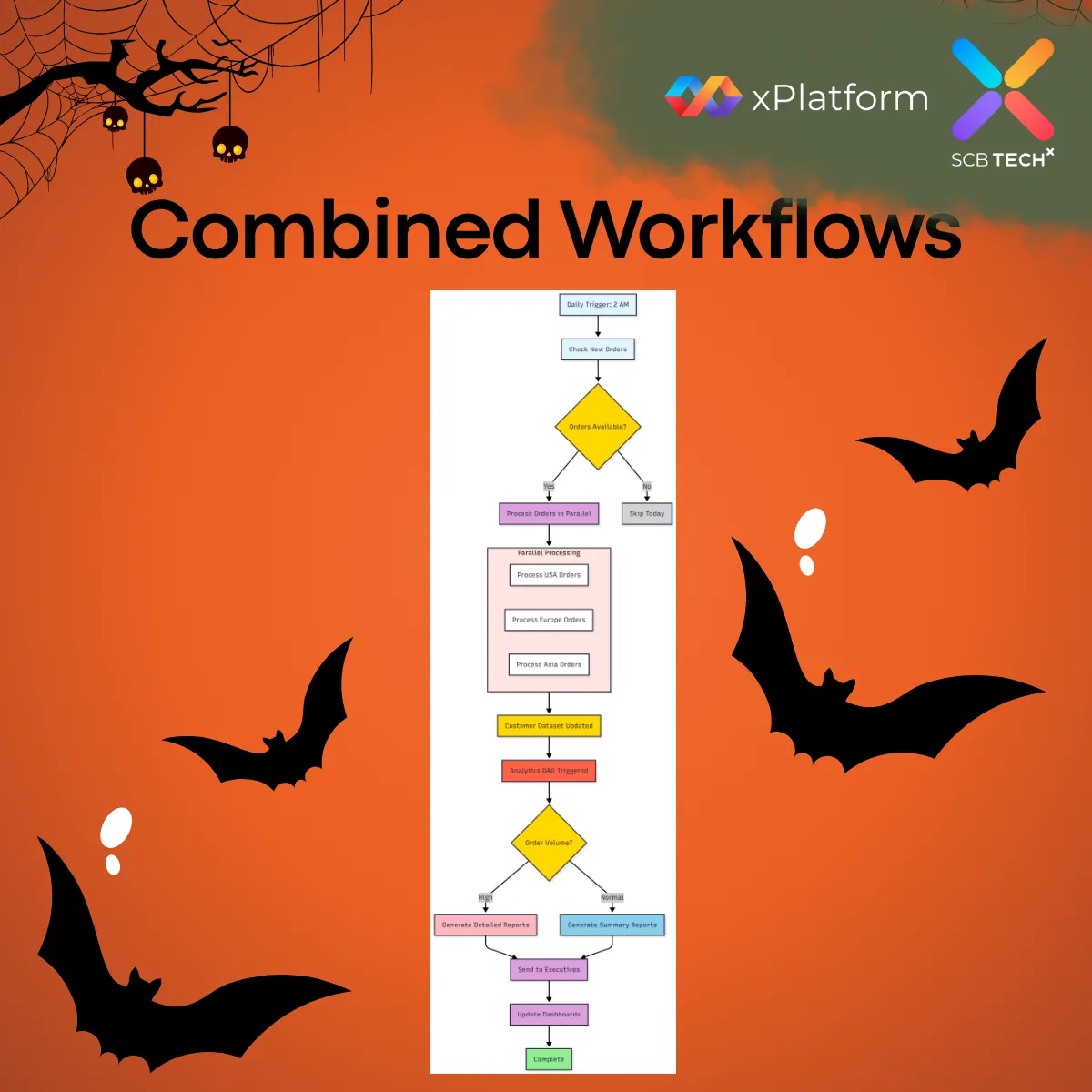

Real-World Example: Combined DAGs in Action

In real applications, a single workflow often combines several of these patterns.

Take a daily e-commerce data pipeline, for example. It might include:

• Scheduled Execution (runs daily at 2 AM)

• Branching (checks if there are new orders)

• Parallel Processing (handles different regions simultaneously)

• Dataset-Triggered (analytics starts when customer data is ready)

• Conditional Logic (generates different reports based on order volume)
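Scheduling and data triggers are handled by Airflow’s scheduler rather than inside task code: the 2 AM run would be a cron schedule such as `0 2 * * *`, and the analytics step would typically live in a separate DAG scheduled on the customer-data asset. The plain-Python sketch below covers only the logic within one run (the new-orders branch, the per-region parallel step, and the volume-based report choice); the order format, regions, and the 1,000-order threshold are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor

HIGH_VOLUME = 1_000  # hypothetical threshold for switching to the detailed report

def process_region(region: str, orders: list[dict]) -> int:
    """Stand-in for per-region work: count that region's orders."""
    return sum(1 for order in orders if order["region"] == region)

def daily_run(orders: list[dict], regions: list[str]) -> str:
    # Branching: skip the rest of the run when there are no new orders.
    if not orders:
        return "skipped: no new orders"
    # Parallel processing: regions are independent, so handle them concurrently.
    with ThreadPoolExecutor() as pool:
        counts = list(pool.map(lambda region: process_region(region, orders), regions))
    total = sum(counts)
    # Conditional logic: choose the report type from the order volume.
    report = "detailed" if total >= HIGH_VOLUME else "summary"
    return f"{report} report for {total} orders"

orders = [{"region": "asia"}, {"region": "europe"}, {"region": "asia"}]
print(daily_run(orders, ["asia", "europe"]))  # summary report for 3 orders
print(daily_run([], ["asia", "europe"]))      # skipped: no new orders
```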

Key Benefits of Airflow 3 DAGs

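1. Automation – Once a workflow is scheduled, it runs on its own with no manual triggering.

2. Visibility – The Airflow UI shows the status, logs, and run history of every task, so you know exactly what’s happening at each step.

3. Reliability – Failed tasks can be retried automatically, with the number of retries and the delay between them configured per task.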

4. Scalability – Handles everything from simple jobs to complex pipelines.

5. Flexibility – Adapts as your business needs evolve.
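Airflow tasks accept `retries` and `retry_delay` arguments (the latter a `timedelta`), and a failed task is re-run until it succeeds or runs out of retries. The helper below is a minimal plain-Python sketch of that behaviour, not Airflow’s implementation; here `retry_delay` is a number of seconds, and the flaky task is a hypothetical stand-in for a call that fails transiently.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")

def run_with_retries(task: Callable[[], T], retries: int = 3, retry_delay: float = 0.0) -> T:
    """Call task(); if it raises, try again up to `retries` more times."""
    failures = 0
    while True:
        try:
            return task()
        except Exception:
            failures += 1
            if failures > retries:
                raise  # out of retries: let the failure surface
            time.sleep(retry_delay)  # wait before the next attempt

def make_flaky_task(failures_before_success: int):
    """Hypothetical task that fails a fixed number of times, then succeeds."""
    calls = {"count": 0}

    def task() -> str:
        calls["count"] += 1
        if calls["count"] <= failures_before_success:
            raise ConnectionError("temporary failure")
        return "ok"

    return task, calls

task, calls = make_flaky_task(2)
print(run_with_retries(task, retries=3), calls["count"])  # ok 3
```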

Looking for a DevOps solution that automates your workflow and reduces business costs? SCB TechX helps you modernize your delivery pipeline and bring high-quality products to market faster, building a foundation for long-term growth.

For service inquiries, please contact us at https://bit.ly/4etA8Ym

Learn more: https://bit.ly/3H7W9zm