DevOps

DevOps is a set of practices that helps teams deliver software faster and with higher quality, with less friction between development and operations. But as data, ML, and AI workloads grow rapidly, traditional DevOps workflows built around CI/CD are no longer enough. Managing data pipelines, model training, deployment, and real-time monitoring is far more complex and requires different tools. The DevOps mindset stays the same, but it must be adapted for data and machine learning. This is where DataOps and MLOps come in. We spoke with Khun Grace, Senior Platform Services Engineer, for a concise overview.

DataOps

DataOps applies DevOps principles to data workflows: ingestion, ETL/ELT, data quality, versioning, and deploying pipelines with the same reliability expected of application code.
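As a rough illustration of treating data quality as code, the sketch below validates a batch of rows and decides whether the pipeline may continue. It assumes rows arrive as plain dictionaries; the names `check_rows`, `gate`, and `QualityReport`, and the 95% default threshold, are hypothetical and not taken from any specific tool.

```python
from dataclasses import dataclass


@dataclass
class QualityReport:
    total: int       # number of rows checked
    passed: int      # rows with all required fields present
    failures: list   # (row_index, reason) pairs


def check_rows(rows, required_fields):
    """Flag rows where a required field is missing, None, or an empty string."""
    failures = []
    for i, row in enumerate(rows):
        missing = [f for f in required_fields if row.get(f) in (None, "")]
        if missing:
            failures.append((i, "missing: " + ", ".join(missing)))
    return QualityReport(
        total=len(rows),
        passed=len(rows) - len(failures),
        failures=failures,
    )


def gate(report, min_pass_rate=0.95):
    """Return True if the batch is clean enough to move to the next step.

    The threshold is an assumed default; an empty batch never passes.
    """
    if report.total == 0:
        return False
    return report.passed / report.total >= min_pass_rate


if __name__ == "__main__":
    batch = [{"id": 1, "amount": 10}, {"id": 2, "amount": None}]
    report = check_rows(batch, ["id", "amount"])
    print(report.failures, gate(report))
```

In a real pipeline, a check like this would typically run as its own step in the orchestrator and in CI, so a bad batch stops the run instead of reaching downstream tables.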

MLOps

MLOps extends DataOps to manage the ML lifecycle: training, experiment tracking, model registry, deployment, monitoring, and automated retraining.
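To make the registry and retraining steps more concrete, here is a minimal in-memory sketch. It is not how MLflow or any other registry works internally; `Registry`, `ModelVersion`, `promote_if_better`, and `needs_retraining` are hypothetical names, and it assumes a single metric where higher is better.

```python
from dataclasses import dataclass, field


@dataclass
class ModelVersion:
    version: int
    metric: float            # e.g. validation accuracy (higher is better)
    stage: str = "staging"   # staging -> production -> archived


@dataclass
class Registry:
    versions: list = field(default_factory=list)

    def register(self, metric):
        """Record a newly trained model as the next version, in staging."""
        mv = ModelVersion(version=len(self.versions) + 1, metric=metric)
        self.versions.append(mv)
        return mv

    def production(self):
        """Return the current production version, or None if there is none."""
        for mv in self.versions:
            if mv.stage == "production":
                return mv
        return None

    def promote_if_better(self, candidate):
        """Promote the candidate only if it beats the production model."""
        current = self.production()
        if current is not None and candidate.metric <= current.metric:
            return False
        if current is not None:
            current.stage = "archived"
        candidate.stage = "production"
        return True


def needs_retraining(live_metric, baseline_metric, tolerance=0.05):
    """Signal retraining when the live metric falls more than `tolerance`
    below the baseline recorded at deployment (assumed threshold)."""
    return (baseline_metric - live_metric) > tolerance
```

A monitoring job could call `needs_retraining` on a schedule, and a training pipeline could call `register` followed by `promote_if_better`, which keeps promotion decisions explicit and auditable.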

While DevOps engineers often turn to the CNCF landscape for CI/CD tools, the DataOps/MLOps ecosystem draws on a different set of references:

• LF AI & Data (MLflow, Feast, ONNX, Delta Lake)

• AI Infrastructure Alliance tool landscape

• Cloud provider best practices (AWS, GCP, Azure)

• Community resources (MLOps Community, DagsHub, DataOps.live)

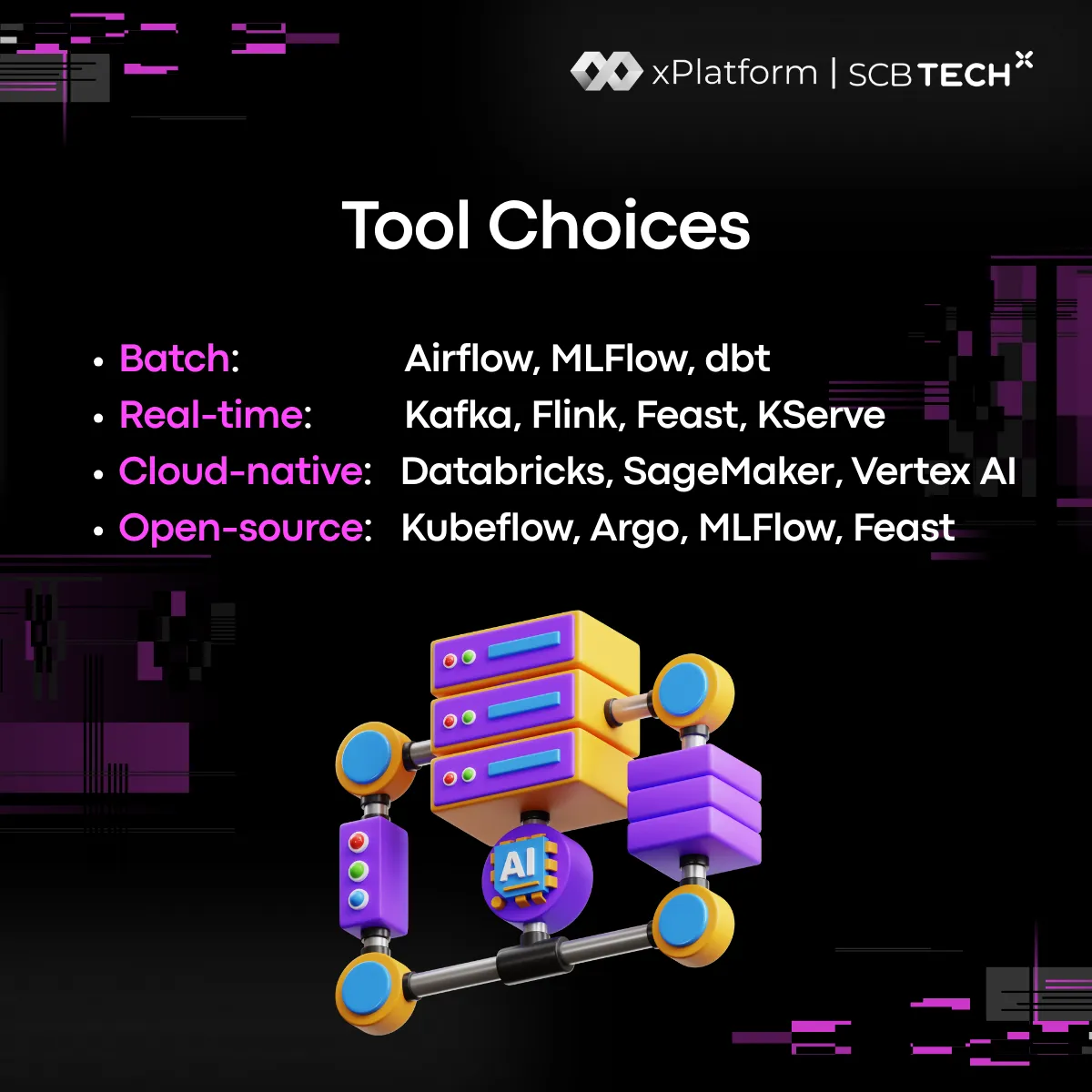

Choosing the right tools depends on your team’s workload:

• Batch pipelines: Airflow, MLflow, dbt

• Real-time ML: Kafka, Flink, Feast, KServe

• Cloud-native: Databricks, SageMaker, Vertex AI

• Fully open-source: Kubeflow, Argo, MLflow, Feast

For organizations seeking DevOps solutions to automate workflows, reduce costs, and accelerate delivery, SCB TechX can help you scale your products and services efficiently in the data- and AI-driven era.

For service inquiries, please contact us at https://bit.ly/4etA8Ym

Learn more: https://bit.ly/3H7W9zm